In previous articles about OpenStack RDO installations we presented deployments using the Packstack automated installer script that still required some post-installation network configuration to complete the deployment. This time we will prepare the Packstack configuration file (answers.txt) in such a way that it does all the magic and no post-installation configuration is required. We will define the full bridge:interface mapping, so that no further bridge setup is needed and the environment is ready to use immediately after the Packstack deployment.

IMPORTANT NOTE: for the purpose of this article we perform a virtualized installation based on KVM. You can read more about KVM-based OpenStack installation in this article: Install OpenStack on KVM – How To Configure KVM for OpenStack. Our internal traffic is based on VLANs, so the internal interfaces send and receive VLAN-tagged frames. In a KVM-based installation this doesn’t matter, but if you install on bare metal (real hardware), you need a network switch supporting the IEEE 802.1Q standard; otherwise connectivity between Instances running on different Compute hosts within the same Tenant will not work.

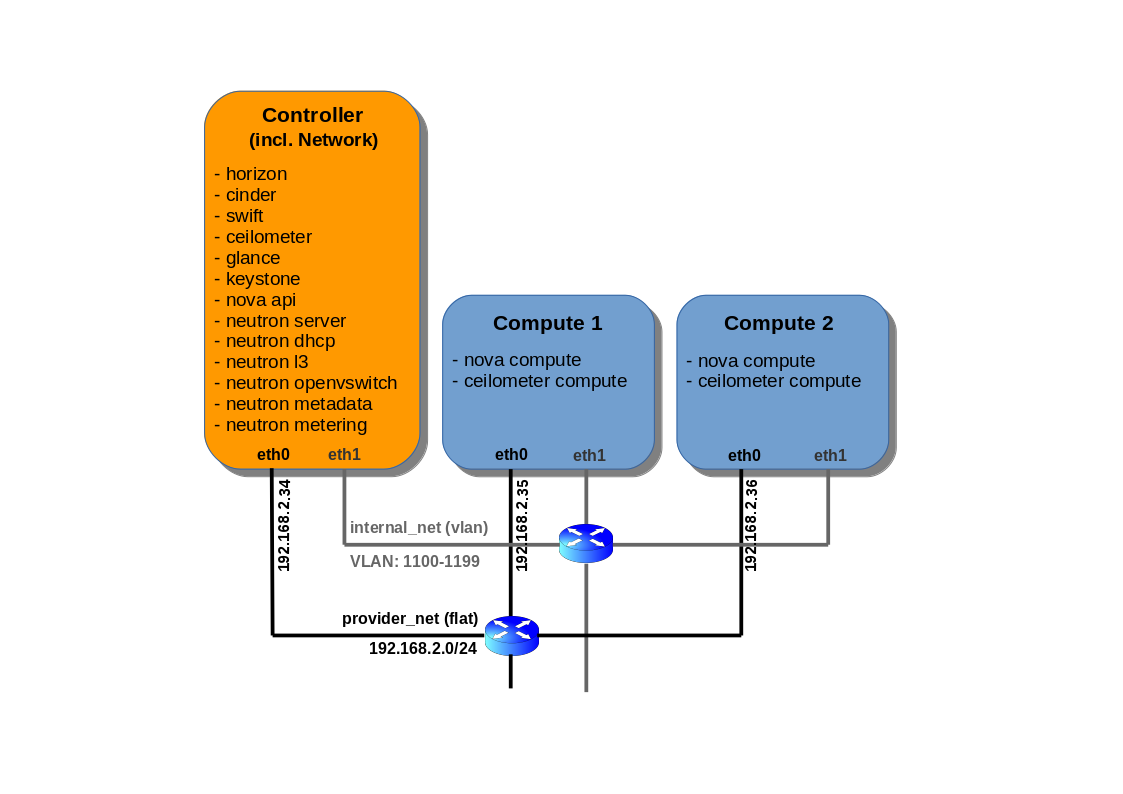

Environment Setup

Controller:

IP: 192.168.2.34 (eth0)

OS: CentOS 7 (64bit)

HW: KVM Virtual Machine

Compute 1:

IP: 192.168.2.35 (eth0)

OS: CentOS 7 (64bit)

HW: KVM Virtual Machine

Compute 2:

IP: 192.168.2.36 (eth0)

OS: CentOS 7 (64bit)

HW: KVM Virtual Machine

In our virtualized installation, as mentioned before, internal traffic (eth1 interfaces) runs over an isolated virtual network (no gateway specified) inside the KVM vHost, which allows VLANs. The external eth0 interfaces are attached to a Linux bridge on the vHost, which is connected to the provider network (provider_net), a flat access network (no VLAN tagging).

1. Prerequisites for Packstack based OpenStack Pike deployment

Before we start the Packstack deployment, the eth0 interface on every node should have a static IP configuration. The config files for the eth0 and eth1 interfaces (ifcfg-eth0 and ifcfg-eth1) should exist on all nodes in the /etc/sysconfig/network-scripts/ directory, and both interfaces should be in state UP. The NetworkManager service should be stopped and disabled on all OpenStack nodes.
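These prerequisites can be checked with a few lines of shell before running Packstack. The sketch below is only an illustration: check_ifcfg is a hypothetical helper (it is not part of Packstack or CentOS), and it only looks for the file and for ONBOOT=yes, so it does not replace a look at the ip a output.

```shell
# check_ifcfg DIR IFACE
# Hypothetical helper (not part of Packstack): verifies that DIR/ifcfg-IFACE
# exists and that the interface is enabled at boot.
check_ifcfg() {
    local dir="$1" iface="$2" file
    file="$dir/ifcfg-$iface"
    if [ ! -f "$file" ]; then
        echo "MISSING $file"
        return 1
    fi
    if ! grep -q '^ONBOOT=yes' "$file"; then
        echo "ONBOOT=yes not found in $file"
        return 1
    fi
    echo "OK $file"
}

# Intended use on each node (commented out so that pasting this block
# only defines the function):
#   check_ifcfg /etc/sysconfig/network-scripts eth0
#   check_ifcfg /etc/sysconfig/network-scripts eth1
#   systemctl stop NetworkManager
#   systemctl disable NetworkManager
```

The directory is passed as an argument so that the check can also be tried against a copy of the config files.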

Controller interfaces configuration before Packstack run:

[root@controller ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:6b:55:21 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.34/24 brd 192.168.2.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe6b:5521/64 scope link

valid_lft forever preferred_lft forever

3: eth1: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:53:af:12 brd ff:ff:ff:ff:ff:ff

inet6 fe80::5054:ff:fe53:af12/64 scope link

valid_lft forever preferred_lft forever

[root@controller ~]# ip route show

default via 192.168.2.1 dev eth0

169.254.0.0/16 dev eth0 scope link metric 1002

169.254.0.0/16 dev eth1 scope link metric 1003

192.168.2.0/24 dev eth0 proto kernel scope link src 192.168.2.34

[root@controller ~]# systemctl status NetworkManager

● NetworkManager.service - Network Manager

Loaded: loaded (/usr/lib/systemd/system/NetworkManager.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:NetworkManager(8)

[root@controller ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0

TYPE=Ethernet

BOOTPROTO=none

DEFROUTE=yes

PEERDNS=yes

NAME=eth0

DEVICE=eth0

ONBOOT=yes

IPADDR=192.168.2.34

PREFIX=24

GATEWAY=192.168.2.1

DNS1=8.8.8.8

DNS2=8.8.4.4

[root@controller ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth1

TYPE=Ethernet

BOOTPROTO=none

DEFROUTE=no

NAME=eth1

DEVICE=eth1

ONBOOT=yes

Compute1 interfaces configuration before Packstack run:

[root@compute1 ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:15:d3:ce brd ff:ff:ff:ff:ff:ff

inet 192.168.2.35/24 brd 192.168.2.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe15:d3ce/64 scope link

valid_lft forever preferred_lft forever

3: eth1: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:ad:8f:ff brd ff:ff:ff:ff:ff:ff

inet6 fe80::5054:ff:fead:8fff/64 scope link

valid_lft forever preferred_lft forever

[root@compute1 ~]# ip route show

default via 192.168.2.1 dev eth0

169.254.0.0/16 dev eth0 scope link metric 1002

169.254.0.0/16 dev eth1 scope link metric 1003

192.168.2.0/24 dev eth0 proto kernel scope link src 192.168.2.35

[root@compute1 ~]# systemctl status NetworkManager

● NetworkManager.service - Network Manager

Loaded: loaded (/usr/lib/systemd/system/NetworkManager.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:NetworkManager(8)

[root@compute1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0

TYPE=Ethernet

BOOTPROTO=none

DEFROUTE=yes

PEERDNS=yes

NAME=eth0

DEVICE=eth0

ONBOOT=yes

IPADDR=192.168.2.35

PREFIX=24

GATEWAY=192.168.2.1

DNS1=8.8.8.8

DNS2=8.8.4.4

[root@compute1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth1

TYPE=Ethernet

BOOTPROTO=none

DEFROUTE=no

NAME=eth1

DEVICE=eth1

ONBOOT=yes

Compute2 interfaces configuration before Packstack run:

[root@compute2 ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:cd:7d:b8 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.36/24 brd 192.168.2.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fecd:7db8/64 scope link

valid_lft forever preferred_lft forever

3: eth1: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:6a:0a:47 brd ff:ff:ff:ff:ff:ff

inet6 fe80::5054:ff:fe6a:a47/64 scope link

valid_lft forever preferred_lft forever

[root@compute2 ~]# ip route show

default via 192.168.2.1 dev eth0

169.254.0.0/16 dev eth0 scope link metric 1002

169.254.0.0/16 dev eth1 scope link metric 1003

192.168.2.0/24 dev eth0 proto kernel scope link src 192.168.2.36

[root@compute2 ~]# systemctl status NetworkManager

● NetworkManager.service - Network Manager

Loaded: loaded (/usr/lib/systemd/system/NetworkManager.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:NetworkManager(8)

[root@compute2 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0

TYPE=Ethernet

BOOTPROTO=none

DEFROUTE=yes

PEERDNS=yes

NAME=eth0

DEVICE=eth0

ONBOOT=yes

IPADDR=192.168.2.36

PREFIX=24

GATEWAY=192.168.2.1

DNS1=8.8.8.8

DNS2=8.8.4.4

[root@compute2 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth1

TYPE=Ethernet

BOOTPROTO=none

DEFROUTE=no

NAME=eth1

DEVICE=eth1

ONBOOT=yes

Update all OpenStack nodes (Controller, Network, Compute):

[root@controller ~]# yum update

[root@compute1 ~]# yum update

[root@compute2 ~]# yum update

2. Install OpenStack Pike RDO repository on Controller node

On CentOS 7, the Extras repo, which is enabled by default, provides the RPM that enables the OpenStack repository:

[root@controller ~]# yum install centos-release-openstack-pike

3. Update Controller node

Update the Controller node once again to fetch and install the newest packages from the Pike RDO repo:

[root@controller ~]# yum update

4. Install Packstack from Pike repo on Controller node

Install Packstack automated installer RPM package:

[root@controller ~]# yum install openstack-packstack

5. Generate answer file for packstack on Controller node

[root@controller ~]# packstack --gen-answer-file=/root/answers.txt

Packstack changed given value to required value /root/.ssh/id_rsa.pub

Packstack generates the answer file with default values; some of the parameters need to be modified according to our needs.

6. Edit answer file on Controller node

Edit the answer file (/root/answers.txt) and modify the parameters below:

CONFIG_HEAT_INSTALL=y

CONFIG_NTP_SERVERS=time1.google.com,time2.google.com

CONFIG_CONTROLLER_HOST=192.168.2.34

CONFIG_COMPUTE_HOSTS=192.168.2.35,192.168.2.36

CONFIG_NETWORK_HOSTS=192.168.2.34

CONFIG_KEYSTONE_ADMIN_USERNAME=admin

CONFIG_KEYSTONE_ADMIN_PW=password

CONFIG_NEUTRON_L3_EXT_BRIDGE=br-ex

CONFIG_NEUTRON_ML2_TYPE_DRIVERS=vlan,flat

CONFIG_NEUTRON_ML2_TENANT_NETWORK_TYPES=vlan

CONFIG_NEUTRON_ML2_VLAN_RANGES=physnet1:1100:1199,physnet0

CONFIG_NEUTRON_OVS_BRIDGE_MAPPINGS=physnet1:br-eth1,physnet0:br-ex

CONFIG_NEUTRON_OVS_BRIDGE_IFACES=br-eth1:eth1,br-ex:eth0

CONFIG_NEUTRON_OVS_BRIDGES_COMPUTE=br-eth1

CONFIG_PROVISION_DEMO=n

CONFIG_PROVISION_TEMPEST=n

Here you can find the complete answers.txt file used for our Packstack deployment.
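Editing the parameters by hand works fine, but the same changes can also be scripted, which helps when the deployment has to be repeated. The following is a minimal sketch under a few assumptions: set_answer is a hypothetical helper (not a Packstack command), the answer file contains one KEY=value line per parameter, and GNU sed is used, as shipped with CentOS 7.

```shell
# set_answer FILE KEY VALUE
# Hypothetical helper: rewrites the existing "KEY=..." line in FILE.
# It refuses to add unknown keys, which helps catch typos in parameter names.
set_answer() {
    local file="$1" key="$2" value="$3" escaped
    if ! grep -q "^${key}=" "$file"; then
        echo "set_answer: ${key} not found in ${file}" >&2
        return 1
    fi
    # Escape characters that are special in a sed replacement (\, & and |).
    escaped=$(printf '%s' "$value" | sed -e 's/[\\&|]/\\&/g')
    # GNU sed edits the file in place with -i.
    sed -i -e "s|^${key}=.*|${key}=${escaped}|" "$file"
}

# Intended use (commented out so that pasting this block only defines
# the function):
#   set_answer /root/answers.txt CONFIG_HEAT_INSTALL y
#   set_answer /root/answers.txt CONFIG_NEUTRON_ML2_TYPE_DRIVERS vlan,flat
#   set_answer /root/answers.txt CONFIG_NEUTRON_OVS_BRIDGE_IFACES br-eth1:eth1,br-ex:eth0
```

Failing on a missing key is a deliberate choice here: a misspelled parameter name would otherwise be appended silently and ignored during the deployment.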

Parameters overview:

CONFIG_HEAT_INSTALL – install Heat Orchestration service along with OpenStack environment

CONFIG_NTP_SERVERS – choose NTP servers needed by message broker (RabbitMQ, Qpid) for inter-node communication

CONFIG_CONTROLLER_HOST – management IP address of Controller node

CONFIG_COMPUTE_HOSTS – management IP addresses of Compute nodes (comma separated list)

CONFIG_NETWORK_HOSTS – management IP address of Network node

CONFIG_KEYSTONE_ADMIN_USERNAME – Keystone admin user name required for API and Horizon dashboard access

CONFIG_KEYSTONE_ADMIN_PW – Keystone admin password required for API and Horizon dashboard access

CONFIG_NEUTRON_L3_EXT_BRIDGE – name of the OVS bridge for external traffic; if you use a provider network for external traffic (assigning Floating IPs from the provider network), you can also enter the value provider here

CONFIG_NEUTRON_ML2_TYPE_DRIVERS – network types which will be used in this OpenStack installation

CONFIG_NEUTRON_ML2_TENANT_NETWORK_TYPES – default network type which will be used during network creation inside tenants

CONFIG_NEUTRON_ML2_VLAN_RANGES – physnets on which the VLAN networks will be created, each with an optional VLAN ID range (a physnet is required for Flat and VLAN networks); if a physnet has no VLAN range assigned, networks created on that physnet will be of the Flat type

CONFIG_NEUTRON_OVS_BRIDGE_MAPPINGS – physnet to bridge mapping (specifying to which OVS bridges the physnets will be attached)

CONFIG_NEUTRON_OVS_BRIDGE_IFACES – OVS bridge to interface mapping (specifying which interfaces will be connected as OVS ports to the particular OVS bridges)

CONFIG_NEUTRON_OVS_BRIDGES_COMPUTE – OVS bridges to be created on the Compute nodes and connected to the integration bridge (br-int)

CONFIG_PROVISION_DEMO – create an example Demo Tenant

CONFIG_PROVISION_TEMPEST – provision Tempest, the OpenStack Integration Test Suite
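To make the relationship between the VLAN ranges, the physnet to bridge mapping and the bridge to interface mapping more concrete, here is a small shell sketch that parses the same comma-separated strings we put into answers.txt. The functions describe_vlan_ranges, lookup_pair and trace_physnet are hypothetical helpers written for this illustration; they are not part of Packstack or Neutron and only show how the values chain together.

```shell
# describe_vlan_ranges "physnet1:1100:1199,physnet0"
# Prints one line per physnet with its VLAN ID range, if any.
describe_vlan_ranges() {
    local entry physnet range
    for entry in $(printf '%s' "$1" | tr ',' ' '); do
        physnet=${entry%%:*}
        if [ "$entry" = "$physnet" ]; then
            echo "$physnet: no VLAN range"
        else
            range=${entry#*:}
            echo "$physnet: VLAN IDs ${range%%:*}-${range##*:}"
        fi
    done
}

# lookup_pair KEY "k1:v1,k2:v2"
# Prints the value stored for KEY, or returns 1 if KEY is absent.
lookup_pair() {
    local key="$1" pair
    for pair in $(printf '%s' "$2" | tr ',' ' '); do
        if [ "${pair%%:*}" = "$key" ]; then
            echo "${pair#*:}"
            return 0
        fi
    done
    return 1
}

# trace_physnet PHYSNET BRIDGE_MAPPINGS BRIDGE_IFACES
# Follows physnet -> OVS bridge -> interface through the two mappings.
trace_physnet() {
    local physnet="$1" bridge iface
    bridge=$(lookup_pair "$physnet" "$2") || { echo "$physnet: no bridge mapping"; return 1; }
    iface=$(lookup_pair "$bridge" "$3") || iface="(no interface)"
    echo "$physnet -> $bridge -> $iface"
}
```

With the values from our answer file, trace_physnet physnet1 "physnet1:br-eth1,physnet0:br-ex" "br-eth1:eth1,br-ex:eth0" prints physnet1 -> br-eth1 -> eth1, and the same call for physnet0 prints physnet0 -> br-ex -> eth0.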

7. Install OpenStack Pike using Packstack

Launch packstack automated installation on Controller node:

[root@controller ~]# packstack --answer-file=/root/answers.txt --timeout=600

We launch Packstack with the timeout parameter extended to 600 seconds to avoid timeout errors during deployment. The installation takes about 1 hour (depending on hardware). Packstack will prompt us for the root password of each node (Controller, Network, Compute):

Welcome to the Packstack setup utility

The installation log file is available at: /var/tmp/packstack/20180122-012409-uNDnRi/openstack-setup.log

Installing:

Clean Up [ DONE ]

Discovering ip protocol version [ DONE ]

root@192.168.2.34's password:

root@192.168.2.35's password:

root@192.168.2.36's password:

Setting up ssh keys [ DONE ]

Preparing servers [ DONE ]

Pre installing Puppet and discovering hosts' details [ DONE ]

Preparing pre-install entries [ DONE ]

Installing time synchronization via NTP [ DONE ]

Setting up CACERT [ DONE ]

Preparing AMQP entries [ DONE ]

Preparing MariaDB entries [ DONE ]

Fixing Keystone LDAP config parameters to be undef if empty[ DONE ]

Preparing Keystone entries [ DONE ]

Preparing Glance entries [ DONE ]

Checking if the Cinder server has a cinder-volumes vg[ DONE ]

Preparing Cinder entries [ DONE ]

Preparing Nova API entries [ DONE ]

Creating ssh keys for Nova migration [ DONE ]

Gathering ssh host keys for Nova migration [ DONE ]

Preparing Nova Compute entries [ DONE ]

Preparing Nova Scheduler entries [ DONE ]

Preparing Nova VNC Proxy entries [ DONE ]

Preparing OpenStack Network-related Nova entries [ DONE ]

Preparing Nova Common entries [ DONE ]

Preparing Neutron LBaaS Agent entries [ DONE ]

Preparing Neutron API entries [ DONE ]

Preparing Neutron L3 entries [ DONE ]

Preparing Neutron L2 Agent entries [ DONE ]

Preparing Neutron DHCP Agent entries [ DONE ]

Preparing Neutron Metering Agent entries [ DONE ]

Checking if NetworkManager is enabled and running [ DONE ]

Preparing OpenStack Client entries [ DONE ]

Preparing Horizon entries [ DONE ]

Preparing Swift builder entries [ DONE ]

Preparing Swift proxy entries [ DONE ]

Preparing Swift storage entries [ DONE ]

Preparing Heat entries [ DONE ]

Preparing Heat CloudFormation API entries [ DONE ]

Preparing Gnocchi entries [ DONE ]

Preparing Redis entries [ DONE ]

Preparing Ceilometer entries [ DONE ]

Preparing Aodh entries [ DONE ]

Preparing Puppet manifests [ DONE ]

Copying Puppet modules and manifests [ DONE ]

Applying 192.168.2.34_controller.pp

192.168.2.34_controller.pp: [ DONE ]

Applying 192.168.2.34_network.pp

192.168.2.34_network.pp: [ DONE ]

Applying 192.168.2.35_compute.pp

Applying 192.168.2.36_compute.pp

192.168.2.35_compute.pp: [ DONE ]

192.168.2.36_compute.pp: [ DONE ]

Applying Puppet manifests [ DONE ]

Finalizing [ DONE ]

**** Installation completed successfully ******

Additional information:

* File /root/keystonerc_admin has been created on OpenStack client host 192.168.2.34. To use the command line tools you need to source the file.

* To access the OpenStack Dashboard browse to http://192.168.2.34/dashboard .

Please, find your login credentials stored in the keystonerc_admin in your home directory.

* The installation log file is available at: /var/tmp/packstack/20180122-012409-uNDnRi/openstack-setup.log

* The generated manifests are available at: /var/tmp/packstack/20180122-012409-uNDnRi/manifests

As we mentioned at the beginning of this tutorial, after a successful Packstack deployment no additional configuration is needed. The cloud is basically ready to work right away, because the answers.txt file did all the hard work. The only thing we need to do is quickly check whether the cloud is configured according to our expectations.
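Part of that quick check can be scripted. The sketch below compares a bridge:interface list (in the same format as CONFIG_NEUTRON_OVS_BRIDGE_IFACES) with what Open vSwitch reports, using ovs-vsctl port-to-br, which prints the bridge that contains a given port. check_bridge_ifaces and the OVS_VSCTL override variable are hypothetical names introduced for this example only.

```shell
# check_bridge_ifaces "br-eth1:eth1,br-ex:eth0"
# Hypothetical helper: for each bridge:interface pair, asks Open vSwitch
# which bridge the interface is attached to and compares the answer.
# OVS_VSCTL is an assumed override for the binary name (handy for dry runs).
check_bridge_ifaces() {
    local pair bridge iface actual status=0
    for pair in $(printf '%s' "$1" | tr ',' ' '); do
        bridge=${pair%%:*}
        iface=${pair#*:}
        # 'ovs-vsctl port-to-br PORT' prints the bridge that contains PORT.
        actual=$(${OVS_VSCTL:-ovs-vsctl} port-to-br "$iface" 2>/dev/null) || actual=""
        if [ "$actual" = "$bridge" ]; then
            echo "OK   $iface is a port of $bridge"
        else
            echo "FAIL $iface expected on $bridge, found '${actual:-none}'"
            status=1
        fi
    done
    return "$status"
}

# Intended use (commented out so that pasting this block only defines
# the function):
#   on the Controller node:  check_bridge_ifaces "br-eth1:eth1,br-ex:eth0"
#   on the Compute nodes:    check_bridge_ifaces "br-eth1:eth1"
```

The Compute nodes are checked for br-eth1 only, because in our answer file CONFIG_NEUTRON_OVS_BRIDGES_COMPUTE lists just that bridge.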

8. Verify Packstack installation

Let’s verify in a web browser that the Horizon dashboard is accessible at:

http://192.168.2.34/dashboard

Use the credentials from the answers.txt file (admin/password) to log in to the Horizon dashboard.

Check network configuration on Controller node:

[root@controller ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast master ovs-system state UP qlen 1000

link/ether 52:54:00:6b:55:21 brd ff:ff:ff:ff:ff:ff

inet6 fe80::5054:ff:fe6b:5521/64 scope link

valid_lft forever preferred_lft forever

3: eth1: mtu 1500 qdisc pfifo_fast master ovs-system state UP qlen 1000

link/ether 52:54:00:53:af:12 brd ff:ff:ff:ff:ff:ff

inet6 fe80::5054:ff:fe53:af12/64 scope link

valid_lft forever preferred_lft forever

4: ovs-system: mtu 1500 qdisc noop state DOWN qlen 1000

link/ether 4a:fc:b4:c3:d2:b0 brd ff:ff:ff:ff:ff:ff

7: br-eth1: mtu 1500 qdisc noqueue state UNKNOWN qlen 1000

link/ether de:2c:97:1d:a9:48 brd ff:ff:ff:ff:ff:ff

inet6 fe80::dc2c:97ff:fe1d:a948/64 scope link

valid_lft forever preferred_lft forever

8: br-ex: mtu 1500 qdisc noqueue state UNKNOWN qlen 1000

link/ether 3a:d4:6b:78:2c:49 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.34/24 brd 192.168.2.255 scope global br-ex

valid_lft forever preferred_lft forever

inet6 fe80::38d4:6bff:fe78:2c49/64 scope link

valid_lft forever preferred_lft forever

9: br-int: mtu 1500 qdisc noop state DOWN qlen 1000

link/ether 42:2a:47:2c:e3:49 brd ff:ff:ff:ff:ff:ff

The IP address is now assigned to the br-ex OVS bridge, and eth0 works as the backend interface for this bridge; in fact, eth0 is a port connected to br-ex. Additionally, the br-eth1 OVS bridge was created to handle internal traffic, and the eth1 interface is connected as a port to br-eth1, acting as its backend interface:

[root@controller ~]# ovs-vsctl show

8c007cdc-befd-4715-8d3d-420f2111489b

Manager "ptcp:6640:127.0.0.1"

is_connected: true

Bridge "br-eth1"

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port "br-eth1"

Interface "br-eth1"

type: internal

Port "eth1"

Interface "eth1"

Port "phy-br-eth1"

Interface "phy-br-eth1"

type: patch

options: {peer="int-br-eth1"}

Bridge br-int

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port br-int

Interface br-int

type: internal

Port "int-br-eth1"

Interface "int-br-eth1"

type: patch

options: {peer="phy-br-eth1"}

Port int-br-ex

Interface int-br-ex

type: patch

options: {peer=phy-br-ex}

Bridge br-ex

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port phy-br-ex

Interface phy-br-ex

type: patch

options: {peer=int-br-ex}

Port br-ex

Interface br-ex

type: internal

Port "eth0"

Interface "eth0"

ovs_version: "2.7.3"

Check network configuration on Compute1 node:

[root@compute1 ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:15:d3:ce brd ff:ff:ff:ff:ff:ff

inet 192.168.2.35/24 brd 192.168.2.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe15:d3ce/64 scope link

valid_lft forever preferred_lft forever

3: eth1: mtu 1500 qdisc pfifo_fast master ovs-system state UP qlen 1000

link/ether 52:54:00:ad:8f:ff brd ff:ff:ff:ff:ff:ff

inet6 fe80::5054:ff:fead:8fff/64 scope link

valid_lft forever preferred_lft forever

6: ovs-system: mtu 1500 qdisc noop state DOWN qlen 1000

link/ether fe:d5:8d:e4:79:b2 brd ff:ff:ff:ff:ff:ff

7: br-eth1: mtu 1500 qdisc noqueue state UNKNOWN qlen 1000

link/ether 52:59:ac:3a:0f:42 brd ff:ff:ff:ff:ff:ff

inet6 fe80::5059:acff:fe3a:f42/64 scope link

valid_lft forever preferred_lft forever

8: br-int: mtu 1500 qdisc noop state DOWN qlen 1000

link/ether b6:e0:0c:c7:be:48 brd ff:ff:ff:ff:ff:ff

Interface eth1 is connected as a port to br-eth1, acting as its backend interface:

[root@compute1 ~]# ovs-vsctl show

9401693d-07a2-4089-a7fc-837528720853

Manager "ptcp:6640:127.0.0.1"

is_connected: true

Bridge "br-eth1"

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port "br-eth1"

Interface "br-eth1"

type: internal

Port "phy-br-eth1"

Interface "phy-br-eth1"

type: patch

options: {peer="int-br-eth1"}

Port "eth1"

Interface "eth1"

Bridge br-int

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port "int-br-eth1"

Interface "int-br-eth1"

type: patch

options: {peer="phy-br-eth1"}

Port br-int

Interface br-int

type: internal

ovs_version: "2.7.3"

And finally check network configuration on Compute2 node:

[root@compute2 ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:cd:7d:b8 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.36/24 brd 192.168.2.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fecd:7db8/64 scope link

valid_lft forever preferred_lft forever

3: eth1: mtu 1500 qdisc pfifo_fast master ovs-system state UP qlen 1000

link/ether 52:54:00:6a:0a:47 brd ff:ff:ff:ff:ff:ff

inet6 fe80::5054:ff:fe6a:a47/64 scope link

valid_lft forever preferred_lft forever

6: ovs-system: mtu 1500 qdisc noop state DOWN qlen 1000

link/ether ba:4c:1c:1b:27:c7 brd ff:ff:ff:ff:ff:ff

7: br-eth1: mtu 1500 qdisc noqueue state UNKNOWN qlen 1000

link/ether ee:6a:6c:e3:25:46 brd ff:ff:ff:ff:ff:ff

inet6 fe80::ec6a:6cff:fee3:2546/64 scope link

valid_lft forever preferred_lft forever

8: br-int: mtu 1500 qdisc noop state DOWN qlen 1000

link/ether 06:1e:04:84:c3:42 brd ff:ff:ff:ff:ff:ff

Interface eth1 is connected as a port to br-eth1, acting as its backend interface:

[root@compute2 ~]# ovs-vsctl show

9b7a1faa-d778-43b6-a4ea-62563b567b0d

Manager "ptcp:6640:127.0.0.1"

is_connected: true

Bridge br-int

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port br-int

Interface br-int

type: internal

Port "int-br-eth1"

Interface "int-br-eth1"

type: patch

options: {peer="phy-br-eth1"}

Bridge "br-eth1"

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port "phy-br-eth1"

Interface "phy-br-eth1"

type: patch

options: {peer="int-br-eth1"}

Port "br-eth1"

Interface "br-eth1"

type: internal

Port "eth1"

Interface "eth1"

ovs_version: "2.7.3"

During the deployment, Packstack created the file /root/keystonerc_admin with admin credentials to manage our OpenStack environment from the CLI. Let’s source the file to import the admin credentials as session variables:

[root@controller ~]# source /root/keystonerc_admin

[root@controller ~(keystone_admin)]#

This way, we avoid being prompted for authentication each time we want to execute a command as admin.
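If a command still asks for credentials, it is worth checking that the variables really ended up in the current shell. The sketch below assumes that keystonerc_admin exports the usual OpenStack client variables OS_USERNAME, OS_PASSWORD and OS_AUTH_URL (verify this against your own file); check_os_env is a hypothetical helper, not an OpenStack command.

```shell
# check_os_env
# Hypothetical helper: reports which of the expected OpenStack client
# variables are missing from the current shell session.
check_os_env() {
    local name value missing=0
    for name in OS_USERNAME OS_PASSWORD OS_AUTH_URL; do
        # Indirect lookup of the variable called $name (names are fixed above).
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "$name is not set"
            missing=1
        fi
    done
    return "$missing"
}

# Intended use (commented out so that pasting this block only defines
# the function):
#   source /root/keystonerc_admin
#   check_os_env && echo "admin credentials loaded"
```

The function prints nothing when all three variables are present and returns a non-zero status otherwise, so it can be chained with && as shown.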

Let’s try to obtain some information about our OpenStack installation.

Verify compute nodes:

[root@controller ~(keystone_admin)]# openstack hypervisor list

+----+---------------------+-----------------+--------------+-------+

| ID | Hypervisor Hostname | Hypervisor Type | Host IP      | State |

+----+---------------------+-----------------+--------------+-------+

|  1 | compute2            | QEMU            | 192.168.2.36 | up    |

|  2 | compute1            | QEMU            | 192.168.2.35 | up    |

+----+---------------------+-----------------+--------------+-------+

Display brief host-service summary:

[root@controller ~(keystone_admin)]# openstack host list

+------------+-------------+----------+

| Host Name  | Service     | Zone     |

+------------+-------------+----------+

| controller | conductor   | internal |

| controller | scheduler   | internal |

| controller | consoleauth | internal |

| compute2   | compute     | nova     |

| compute1   | compute     | nova     |

+------------+-------------+----------+

Display neutron agent list on all nodes:

[root@controller ~(keystone_admin)]# openstack network agent list

+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+

| ID                                   | Agent Type         | Host       | Availability Zone | Alive | State | Binary                    |

+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+

| 12780584-0319-45b1-988c-023fe1a8c16d | Open vSwitch agent | compute2   | None              | ':-)' | UP    | neutron-openvswitch-agent |

| 5a405415-d490-4900-bb2a-5845e666e75e | Open vSwitch agent | compute1   | None              | ':-)' | UP    | neutron-openvswitch-agent |

| 9e37c598-232c-4356-8a2c-5f9690f499b8 | DHCP agent         | controller | nova              | ':-)' | UP    | neutron-dhcp-agent        |

| c42933aa-bffd-48d4-829a-b8afa988c4af | Open vSwitch agent | controller | None              | ':-)' | UP    | neutron-openvswitch-agent |

| cbd1044f-67e8-4b69-8a17-127bbe8e6a4b | L3 agent           | controller | nova              | ':-)' | UP    | neutron-l3-agent          |

| d73ced30-2c9d-431a-8ec5-db9287fea2f8 | Metadata agent     | controller | None              | ':-)' | UP    | neutron-metadata-agent    |

| f4184aae-8f36-45c0-ba13-da4eddfa1d82 | Metering agent     | controller | None              | ':-)' | UP    | neutron-metering-agent    |

+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+

Display installed services:

[root@controller ~(keystone_admin)]# openstack service list

+----------------------------------+------------+----------------+

| ID                               | Name       | Type           |

+----------------------------------+------------+----------------+

| 185c2397ccfd4b1ab2472eee8fac1104 | gnocchi    | metric         |

| 218326bd1249444da11afb93c89761ce | nova       | compute        |

| 266b6275884945d39dbc08cb3297eaa2 | ceilometer | metering       |

| 4f0ebe86b6284fb689387bbc3212f9f5 | cinder     | volume         |

| 59392edd44984143bc47a89e111beb0a | heat       | orchestration  |

| 6e2c0431b52c417f939dc71fd606d847 | cinderv3   | volumev3       |

| 76f57a7d34d649d7a9652e0a2475d96a | cinderv2   | volumev2       |

| 7702f8e926cf4227857ddca46b3b328f | swift      | object-store   |

| aef9ec430ac2403f88477afed1880697 | aodh       | alarming       |

| b0d402a9ed0c4c54ae9d949e32e8527f | neutron    | network        |

| b3d1ed21ca384878b5821074c4e0fafe | heat-cfn   | cloudformation |

| bee473062bc448118d8975e46af155df | glance     | image          |

| cf33ec475eaf43bfa7a4f7e3a80615aa | keystone   | identity       |

| fe332b47264f45d5af015f40b582ffec | placement  | placement      |

+----------------------------------+------------+----------------+

And that’s it, our OpenStack cloud is now ready to use.
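The verification commands above can also be turned into a repeatable check, for example to re-run after rebooting the nodes. The sketch below reads "hostname state" lines, such as those produced by the openstack client with the value formatter, and fails if any hypervisor is not up. all_hypervisors_up is a hypothetical helper; the exact column selection in the usage comment is an assumption, so check it against your client version.

```shell
# all_hypervisors_up
# Hypothetical helper: reads "hostname state" lines on stdin and returns
# non-zero if any hypervisor is not "up" or if no hypervisor is listed.
all_hypervisors_up() {
    local host state status=0 seen=0
    while read -r host state; do
        [ -n "$host" ] || continue
        seen=1
        if [ "$state" != "up" ]; then
            echo "hypervisor $host is $state"
            status=1
        fi
    done
    [ "$seen" -eq 1 ] || { echo "no hypervisors reported"; return 1; }
    return "$status"
}

# Intended use after sourcing /root/keystonerc_admin (commented out so that
# pasting this block only defines the function):
#   openstack hypervisor list -f value -c "Hypervisor Hostname" -c State | all_hypervisors_up
```

Treating an empty list as a failure is a deliberate choice, since a cloud without any registered Compute node is not ready to launch Instances either.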

Hi Grzegorz Juszczak, is there any document with VXLAN and extnet configuration with 2 nodes or 3 nodes openstack pike or any latest version installation?

Hi,

here you can find how to create public network and a router attached to that network:

http://www.tuxfixer.com/create-tenant-in-openstack-newton-using-command-line-interface/

Grzegorz Juszczak this is super …

Please share latest version of OpenStack 2 node or 3 node installation with VXLAN

Hi ..Is there any link from where i can see tenant networking using vlans. Do we require a router ..

I want to launch a couple of VMs which can talk to each other using internal vlan ..at the same time all must be able to reach or ssh outside the openstack..

Thanks

Nitin

Hi Nitin

If VLANs are configured correctly, then it doesn’t matter whether you use external VLANs or internal VLANs inside tenant, VMs should see each other. For external VLANs they will communicate via an external switch (outside OpenStack). If you are using internal networks which are connected to qrouter and then assigned FloatingIPs, VMs should ping each other using internal network inside the tenant.

To see tenant network setup, just go to Network -> Network Topology tab.