OpenStack can use different backend technologies for the Cinder volume service, which creates volumes for instances running in the cloud. The default and most common Cinder backend is LVM (Logical Volume Manager), but it has one basic disadvantage: it is slow and overloads the server that hosts LVM (usually the Controller), especially during volume operations such as volume deletion. OpenStack also supports other Cinder backends, such as GlusterFS, which is a more sophisticated and reliable solution: it provides redundancy and does not consume the Controller's resources, because it usually runs on separate dedicated servers.

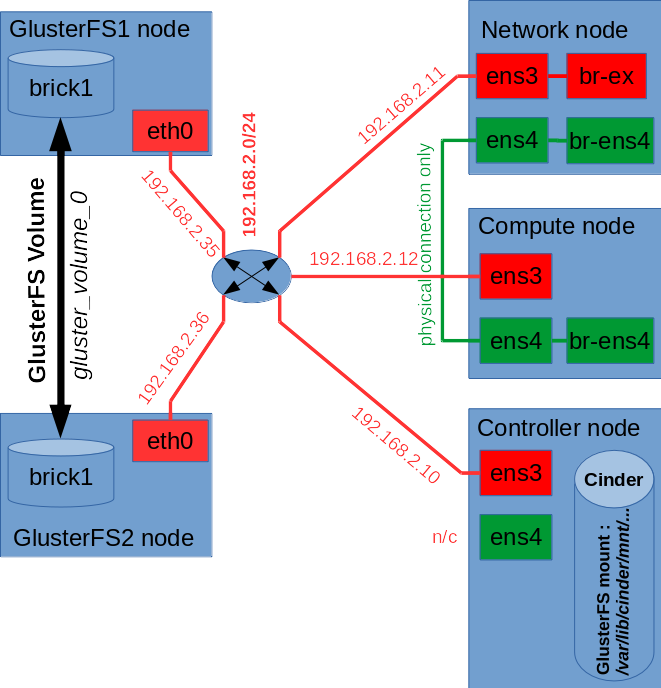

In this tutorial we are going to deploy a VLAN-based OpenStack Mitaka on three CentOS 7 nodes (Controller, Network, Compute) using the Packstack installer script, and integrate it with an existing redundant GlusterFS storage built on two Gluster peers.

Environment used:

public network (Floating IP network): 192.168.2.0/24

internal network (on each node): no IP space, physical connection only (interface: ens4)

controller public IP: 192.168.2.10 (interface: ens3)

network public IP: 192.168.2.11 (interface: ens3)

compute public IP: 192.168.2.12 (interface: ens3)

glusterfs1 public IP: 192.168.2.35 (interface: eth0)

glusterfs2 public IP: 192.168.2.36 (interface: eth0)

OS version (each node): CentOS x86_64 release 7.2.1511 (Core)
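Before going further, it can help to sanity-check the addressing plan listed above. The following is a minimal sketch, not part of the original procedure: it only uses the addresses from the environment list, and the helper name `check_addresses` is made up for illustration.

```python
import ipaddress

# Values copied from the environment list above.
PUBLIC_NETWORK = ipaddress.ip_network("192.168.2.0/24")
NODES = {
    "controller": "192.168.2.10",
    "network": "192.168.2.11",
    "compute": "192.168.2.12",
    "glusterfs1": "192.168.2.35",
    "glusterfs2": "192.168.2.36",
}


def check_addresses(nodes, network):
    """Return a list of problems: addresses outside the network or reused.

    Hypothetical helper for illustration only.
    """
    problems = []
    seen = {}
    for name, addr in nodes.items():
        ip = ipaddress.ip_address(addr)
        if ip not in network:
            problems.append(f"{name}: {addr} is outside {network}")
        if addr in seen:
            problems.append(f"{name}: {addr} is already used by {seen[addr]}")
        seen.setdefault(addr, name)
    return problems


if __name__ == "__main__":
    for line in check_addresses(NODES, PUBLIC_NETWORK) or ["all addresses look consistent"]:
        print(line)
```

An empty result means every node address is unique and lies inside the Floating IP network.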

Note: this tutorial assumes that you already have a working GlusterFS installation, which is a prerequisite. See How to Install and Configure GlusterFS Storage on CentOS 7 Servers.

Steps:

1. Prerequisites

In order to be able to install OpenStack using Packstack we need to meet the following requirements on all nodes:

1.1 Update system on all nodes (Controller, Network, Compute)

[root@controller ~]# yum update

[root@network ~]# yum update

[root@compute ~]# yum update

1.2 Stop and disable NetworkManager on all nodes (Controller, Network, Compute)

[root@controller ~]# systemctl stop NetworkManager

[root@controller ~]# systemctl disable NetworkManager

[root@network ~]# systemctl stop NetworkManager

[root@network ~]# systemctl disable NetworkManager

[root@compute ~]# systemctl stop NetworkManager

[root@compute ~]# systemctl disable NetworkManager

1.3 Verify network interfaces on all nodes before OpenStack installation

Make sure the public network interfaces (ens3) on all nodes are configured properly using static IP addresses to avoid further problems.
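The static-address requirement can be checked mechanically. The sketch below is only an illustration under stated assumptions: the helper names `parse_ifcfg` and `static_ip_problems` are hypothetical, the sample text mirrors the Controller's ifcfg-ens3 file, and `BOOTPROTO="none"` is also accepted as static because CentOS network scripts treat it that way.

```python
def parse_ifcfg(text):
    """Parse KEY="value" lines of an ifcfg file into a dict."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip().strip('"').strip("'")
    return settings


def static_ip_problems(settings, expected_ip):
    """List reasons why the interface would not come up with expected_ip."""
    problems = []
    # "none" means no boot-time protocol, i.e. a static configuration.
    if settings.get("BOOTPROTO", "").lower() not in ("static", "none"):
        problems.append("BOOTPROTO is not static")
    if settings.get("ONBOOT", "").lower() != "yes":
        problems.append("ONBOOT is not yes")
    if settings.get("IPADDR") != expected_ip:
        problems.append(f"IPADDR is {settings.get('IPADDR')}, expected {expected_ip}")
    return problems


# Sample mirroring the Controller's ifcfg-ens3 file.
SAMPLE = '''
TYPE="Ethernet"
BOOTPROTO="static"
DEVICE="ens3"
ONBOOT="yes"
IPADDR="192.168.2.10"
PREFIX="24"
GATEWAY="192.168.2.1"
'''

if __name__ == "__main__":
    print(static_ip_problems(parse_ifcfg(SAMPLE), "192.168.2.10"))
```

On a real node you would read /etc/sysconfig/network-scripts/ifcfg-ens3 instead of the embedded sample.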

Below we present our network configuration right before OpenStack installation:

Controller node:

[root@controller ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens3: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:2b:d5:fb brd ff:ff:ff:ff:ff:ff

inet 192.168.2.10/24 brd 192.168.2.255 scope global ens3

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe2b:d5fb/64 scope link

valid_lft forever preferred_lft forever

3: ens4: mtu 1500 qdisc noop state DOWN qlen 1000

link/ether 52:54:00:46:a1:95 brd ff:ff:ff:ff:ff:ff

[root@controller ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens3

TYPE="Ethernet"

BOOTPROTO="static"

DEFROUTE="yes"

PEERDNS="no"

NAME="ens3"

UUID="650ea528-3b38-4973-9648-d577bfb53ecb"

DEVICE="ens3"

ONBOOT="yes"

IPADDR="192.168.2.10"

PREFIX="24"

GATEWAY="192.168.2.1"

[root@controller ~]# cat /etc/resolv.conf

# Generated by NetworkManager

nameserver 8.8.8.8

nameserver 8.8.4.4

[root@controller ~]# ip route show

default via 192.168.2.1 dev ens3

169.254.0.0/16 dev ens3 scope link metric 1002

192.168.2.0/24 dev ens3 proto kernel scope link src 192.168.2.10

Network node:

[root@network ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens3: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:8d:87:98 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.11/24 brd 192.168.2.255 scope global ens3

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe8d:8798/64 scope link

valid_lft forever preferred_lft forever

3: ens4: mtu 1500 qdisc noop state DOWN qlen 1000

link/ether 52:54:00:b6:db:29 brd ff:ff:ff:ff:ff:ff

[root@network ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens3

TYPE="Ethernet"

BOOTPROTO="static"

DEFROUTE="yes"

PEERDNS="no"

NAME="ens3"

UUID="650ea528-3b38-4973-9648-d577bfb53ecb"

DEVICE="ens3"

ONBOOT="yes"

IPADDR="192.168.2.11"

PREFIX="24"

GATEWAY="192.168.2.1"

[root@network ~]# cat /etc/resolv.conf

# Generated by NetworkManager

nameserver 8.8.8.8

nameserver 8.8.4.4

[root@network ~]# ip route show

default via 192.168.2.1 dev ens3

169.254.0.0/16 dev ens3 scope link metric 1002

192.168.2.0/24 dev ens3 proto kernel scope link src 192.168.2.11

Compute node:

[root@compute ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens3: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:09:04:e7 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.12/24 brd 192.168.2.255 scope global ens3

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe09:4e7/64 scope link

valid_lft forever preferred_lft forever

3: ens4: mtu 1500 qdisc noop state DOWN qlen 1000

link/ether 52:54:00:ad:a1:fa brd ff:ff:ff:ff:ff:ff

[root@compute ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens3

TYPE="Ethernet"

BOOTPROTO="static"

DEFROUTE="yes"

PEERDNS="no"

NAME="ens3"

UUID="650ea528-3b38-4973-9648-d577bfb53ecb"

DEVICE="ens3"

ONBOOT="yes"

IPADDR="192.168.2.12"

PREFIX="24"

GATEWAY="192.168.2.1"

[root@compute ~]# cat /etc/resolv.conf

# Generated by NetworkManager

nameserver 8.8.8.8

nameserver 8.8.4.4

[root@compute ~]# ip route show

default via 192.168.2.1 dev ens3

169.254.0.0/16 dev ens3 scope link metric 1002

192.168.2.0/24 dev ens3 proto kernel scope link src 192.168.2.12

2. Install RDO OpenStack Mitaka repository (Controller only)

[root@controller ~]# yum install https://repos.fedorapeople.org/repos/openstack/openstack-mitaka/rdo-release-mitaka-5.noarch.rpm

3. Install Packstack automated installer (Controller only)

[root@controller ~]# yum install openstack-packstack

4. Generate answer file for Packstack automated installation (Controller only)

[root@controller ~]# packstack --gen-answer-file=/root/answers.txt

Packstack changed given value to required value /root/.ssh/id_rsa.pub

5. Edit answer file (Controller only)

Edit the answer file (/root/answers.txt) and modify its parameters to look like below:

[root@controller ~]# vim /root/answers.txt

CONFIG_NTP_SERVERS=0.pool.ntp.org,1.pool.ntp.org,2.pool.ntp.org

CONFIG_NAGIOS_INSTALL=n

CONFIG_CONTROLLER_HOST=192.168.2.10

CONFIG_COMPUTE_HOSTS=192.168.2.12

CONFIG_NETWORK_HOSTS=192.168.2.11

CONFIG_USE_EPEL=y

CONFIG_RH_OPTIONAL=n

CONFIG_KEYSTONE_ADMIN_PW=password

CONFIG_CINDER_BACKEND=gluster

CONFIG_CINDER_VOLUMES_SIZE=10G

CONFIG_CINDER_GLUSTER_MOUNTS=192.168.2.35:/gluster_volume_0

CONFIG_NEUTRON_ML2_TYPE_DRIVERS=vlan

CONFIG_NEUTRON_ML2_TENANT_NETWORK_TYPES=vlan

CONFIG_NEUTRON_ML2_VLAN_RANGES=physnet1:1000:2000

CONFIG_NEUTRON_OVS_BRIDGE_MAPPINGS=physnet1:br-ens4

CONFIG_NEUTRON_OVS_BRIDGE_IFACES=br-ens4:ens4

CONFIG_NEUTRON_OVS_BRIDGES_COMPUTE=br-ens4

CONFIG_PROVISION_DEMO=n

Here we attach the answers.txt file used during our VLAN-based 3-node OpenStack Mitaka installation with GlusterFS storage backend.

Note: we left the rest of the parameters with their default values, as they are not critical for the installation to succeed, but of course feel free to modify them according to your needs.
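Instead of editing the file by hand in vim, the same parameter changes could be applied with a small script. This is a sketch under assumptions rather than the procedure used in this tutorial: `apply_overrides` is a hypothetical helper, and it assumes the answer file consists of plain KEY=value lines. Keys that are not found are reported rather than appended, since a misspelled key would otherwise go unnoticed.

```python
def apply_overrides(answer_text, overrides):
    """Return (new_text, missing_keys) after replacing KEY=value lines.

    Hypothetical helper: only existing, uncommented keys are rewritten.
    """
    remaining = dict(overrides)
    out = []
    for line in answer_text.splitlines():
        key = line.split("=", 1)[0].strip()
        if "=" in line and not line.lstrip().startswith("#") and key in remaining:
            out.append(f"{key}={remaining.pop(key)}")
        else:
            out.append(line)
    return "\n".join(out) + "\n", sorted(remaining)


# A few of the values from the list above; extend as needed.
OVERRIDES = {
    "CONFIG_CINDER_BACKEND": "gluster",
    "CONFIG_CINDER_GLUSTER_MOUNTS": "192.168.2.35:/gluster_volume_0",
    "CONFIG_NEUTRON_ML2_TYPE_DRIVERS": "vlan",
    "CONFIG_NEUTRON_ML2_TENANT_NETWORK_TYPES": "vlan",
    "CONFIG_PROVISION_DEMO": "n",
}

if __name__ == "__main__":
    # Made-up two-line stand-in for /root/answers.txt, for demonstration only.
    demo = "CONFIG_CINDER_BACKEND=old-value\nCONFIG_PROVISION_DEMO=y\n"
    text, missing = apply_overrides(demo, OVERRIDES)
    print(text)
    print("keys not found:", missing)
```

Checking the list of missing keys before running Packstack catches typos in parameter names early.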

6. Install OpenStack Mitaka using packstack (Controller only)

Launch packstack automated installation:

[root@controller ~]# packstack --answer-file=/root/answers.txt --timeout=600

Installation takes about 1.5-2 hours (depending on hardware). Packstack will prompt for the root password of each node (Controller, Network, Compute) so that it can deploy OpenStack services on all nodes using Puppet scripts:

Welcome to the Packstack setup utility

The installation log file is available at: /var/tmp/packstack/20160811-001455-GRBlYN/openstack-setup.log

Installing:

Clean Up [ DONE ]

Discovering ip protocol version [ DONE ]

root@192.168.2.12's password:

root@192.168.2.10's password:

root@192.168.2.11's password:

Setting up ssh keys [ DONE ]

Preparing servers [ DONE ]

Pre installing Puppet and discovering hosts' details [ DONE ]

Adding pre install manifest entries [ DONE ]

Installing time synchronization via NTP [ DONE ]

Setting up CACERT [ DONE ]

Adding AMQP manifest entries [ DONE ]

Adding MariaDB manifest entries [ DONE ]

Adding Apache manifest entries [ DONE ]

Fixing Keystone LDAP config parameters to be undef if empty[ DONE ]

Adding Keystone manifest entries [ DONE ]

Adding Glance Keystone manifest entries [ DONE ]

Adding Glance manifest entries [ DONE ]

Adding Cinder Keystone manifest entries [ DONE ]

Adding Cinder manifest entries [ DONE ]

Adding Nova API manifest entries [ DONE ]

Adding Nova Keystone manifest entries [ DONE ]

Adding Nova Cert manifest entries [ DONE ]

Adding Nova Conductor manifest entries [ DONE ]

Creating ssh keys for Nova migration [ DONE ]

Gathering ssh host keys for Nova migration [ DONE ]

Adding Nova Compute manifest entries [ DONE ]

Adding Nova Scheduler manifest entries [ DONE ]

Adding Nova VNC Proxy manifest entries [ DONE ]

Adding OpenStack Network-related Nova manifest entries[ DONE ]

Adding Nova Common manifest entries [ DONE ]

Adding Neutron VPNaaS Agent manifest entries [ DONE ]

Adding Neutron FWaaS Agent manifest entries [ DONE ]

Adding Neutron LBaaS Agent manifest entries [ DONE ]

Adding Neutron API manifest entries [ DONE ]

Adding Neutron Keystone manifest entries [ DONE ]

Adding Neutron L3 manifest entries [ DONE ]

Adding Neutron L2 Agent manifest entries [ DONE ]

Adding Neutron DHCP Agent manifest entries [ DONE ]

Adding Neutron Metering Agent manifest entries [ DONE ]

Adding Neutron Metadata Agent manifest entries [ DONE ]

Adding Neutron SR-IOV Switch Agent manifest entries [ DONE ]

Checking if NetworkManager is enabled and running [ DONE ]

Adding OpenStack Client manifest entries [ DONE ]

Adding Horizon manifest entries [ DONE ]

Adding Swift Keystone manifest entries [ DONE ]

Adding Swift builder manifest entries [ DONE ]

Adding Swift proxy manifest entries [ DONE ]

Adding Swift storage manifest entries [ DONE ]

Adding Swift common manifest entries [ DONE ]

Adding Gnocchi manifest entries [ DONE ]

Adding Gnocchi Keystone manifest entries [ DONE ]

Adding MongoDB manifest entries [ DONE ]

Adding Redis manifest entries [ DONE ]

Adding Ceilometer manifest entries [ DONE ]

Adding Ceilometer Keystone manifest entries [ DONE ]

Adding Aodh manifest entries [ DONE ]

Adding Aodh Keystone manifest entries [ DONE ]

Copying Puppet modules and manifests [ DONE ]

Applying 192.168.2.12_prescript.pp

Applying 192.168.2.10_prescript.pp

Applying 192.168.2.11_prescript.pp

192.168.2.12_prescript.pp: [ DONE ]

192.168.2.10_prescript.pp: [ DONE ]

192.168.2.11_prescript.pp: [ DONE ]

Applying 192.168.2.12_chrony.pp

Applying 192.168.2.10_chrony.pp

Applying 192.168.2.11_chrony.pp

192.168.2.11_chrony.pp: [ DONE ]

192.168.2.12_chrony.pp: [ DONE ]

192.168.2.10_chrony.pp: [ DONE ]

Applying 192.168.2.10_amqp.pp

Applying 192.168.2.10_mariadb.pp

192.168.2.10_amqp.pp: [ DONE ]

192.168.2.10_mariadb.pp: [ DONE ]

Applying 192.168.2.10_apache.pp

192.168.2.10_apache.pp: [ DONE ]

Applying 192.168.2.10_keystone.pp

Applying 192.168.2.10_glance.pp

Applying 192.168.2.10_cinder.pp

192.168.2.10_keystone.pp: [ DONE ]

192.168.2.10_cinder.pp: [ DONE ]

192.168.2.10_glance.pp: [ DONE ]

Applying 192.168.2.10_api_nova.pp

192.168.2.10_api_nova.pp: [ DONE ]

Applying 192.168.2.10_nova.pp

Applying 192.168.2.12_nova.pp

192.168.2.10_nova.pp: [ DONE ]

192.168.2.12_nova.pp: [ DONE ]

Applying 192.168.2.12_neutron.pp

Applying 192.168.2.10_neutron.pp

Applying 192.168.2.11_neutron.pp

192.168.2.12_neutron.pp: [ DONE ]

192.168.2.10_neutron.pp: [ DONE ]

192.168.2.11_neutron.pp: [ DONE ]

Applying 192.168.2.10_osclient.pp

Applying 192.168.2.10_horizon.pp

192.168.2.10_osclient.pp: [ DONE ]

192.168.2.10_horizon.pp: [ DONE ]

Applying 192.168.2.10_ring_swift.pp

192.168.2.10_ring_swift.pp: [ DONE ]

Applying 192.168.2.10_swift.pp

192.168.2.10_swift.pp: [ DONE ]

Applying 192.168.2.10_gnocchi.pp

192.168.2.10_gnocchi.pp: [ DONE ]

Applying 192.168.2.10_mongodb.pp

Applying 192.168.2.10_redis.pp

192.168.2.10_mongodb.pp: [ DONE ]

192.168.2.10_redis.pp: [ DONE ]

Applying 192.168.2.10_ceilometer.pp

192.168.2.10_ceilometer.pp: [ DONE ]

Applying 192.168.2.10_aodh.pp

192.168.2.10_aodh.pp: [ DONE ]

Applying Puppet manifests [ DONE ]

Finalizing [ DONE ]

**** Installation completed successfully ******

Additional information:

* File /root/keystonerc_admin has been created on OpenStack client host 192.168.2.10. To use the command line tools you need to source the file.

* To access the OpenStack Dashboard browse to http://192.168.2.10/dashboard .

Please, find your login credentials stored in the keystonerc_admin in your home directory.

* The installation log file is available at: /var/tmp/packstack/20160811-001455-GRBlYN/openstack-setup.log

* The generated manifests are available at: /var/tmp/packstack/20160811-001455-GRBlYN/manifests

7. Verify OpenStack Mitaka installation

To log in to Horizon (OpenStack Dashboard), type the following in your web browser:

http://192.168.2.10/dashboard

You should see the Dashboard login screen. Enter the login and password (in our case: admin/password).

Services on Controller node right after OpenStack Mitaka installation:

[root@controller ~]# systemctl list-unit-files | grep openstack

openstack-aodh-api.service disabled

openstack-aodh-evaluator.service enabled

openstack-aodh-listener.service enabled

openstack-aodh-notifier.service enabled

openstack-ceilometer-api.service disabled

openstack-ceilometer-central.service enabled

openstack-ceilometer-collector.service enabled

openstack-ceilometer-notification.service enabled

openstack-ceilometer-polling.service disabled

openstack-cinder-api.service enabled

openstack-cinder-backup.service enabled

openstack-cinder-scheduler.service enabled

openstack-cinder-volume.service enabled

openstack-glance-api.service enabled

openstack-glance-glare.service disabled

openstack-glance-registry.service enabled

openstack-glance-scrubber.service disabled

openstack-gnocchi-api.service disabled

openstack-gnocchi-metricd.service enabled

openstack-gnocchi-statsd.service enabled

openstack-keystone.service disabled

openstack-nova-api.service enabled

openstack-nova-cert.service enabled

openstack-nova-conductor.service enabled

openstack-nova-console.service disabled

openstack-nova-consoleauth.service enabled

openstack-nova-metadata-api.service disabled

openstack-nova-novncproxy.service enabled

openstack-nova-os-compute-api.service disabled

openstack-nova-scheduler.service enabled

openstack-nova-xvpvncproxy.service disabled

openstack-swift-account-auditor.service enabled

openstack-swift-account-auditor@.service disabled

openstack-swift-account-reaper.service enabled

openstack-swift-account-reaper@.service disabled

openstack-swift-account-replicator.service enabled

openstack-swift-account-replicator@.service disabled

openstack-swift-account.service enabled

openstack-swift-account@.service disabled

openstack-swift-container-auditor.service enabled

openstack-swift-container-auditor@.service disabled

openstack-swift-container-reconciler.service disabled

openstack-swift-container-replicator.service enabled

openstack-swift-container-replicator@.service disabled

openstack-swift-container-updater.service enabled

openstack-swift-container-updater@.service disabled

openstack-swift-container.service enabled

openstack-swift-container@.service disabled

openstack-swift-object-auditor.service enabled

openstack-swift-object-auditor@.service disabled

openstack-swift-object-expirer.service enabled

openstack-swift-object-replicator.service enabled

openstack-swift-object-replicator@.service disabled

openstack-swift-object-updater.service enabled

openstack-swift-object-updater@.service disabled

openstack-swift-object.service enabled

openstack-swift-object@.service disabled

openstack-swift-proxy.service enabled

[root@controller ~]# systemctl list-unit-files | grep neutron

neutron-dhcp-agent.service disabled

neutron-l3-agent.service disabled

neutron-linuxbridge-cleanup.service disabled

neutron-metadata-agent.service disabled

neutron-netns-cleanup.service disabled

neutron-ovs-cleanup.service disabled

neutron-server.service enabled

[root@controller ~]# systemctl list-unit-files | grep ovs

neutron-ovs-cleanup.service disabled

Services on Network node right after OpenStack Mitaka installation:

[root@network ~]# systemctl list-unit-files | grep openstack

[root@network ~]# systemctl list-unit-files | grep neutron

neutron-dhcp-agent.service enabled

neutron-l3-agent.service enabled

neutron-linuxbridge-cleanup.service disabled

neutron-metadata-agent.service enabled

neutron-metering-agent.service enabled

neutron-netns-cleanup.service disabled

neutron-openvswitch-agent.service enabled

neutron-ovs-cleanup.service enabled

neutron-server.service disabled

[root@network ~]# systemctl list-unit-files | grep ovs

neutron-ovs-cleanup.service enabled

Services on Compute node right after OpenStack Mitaka installation:

[root@compute ~]# systemctl list-unit-files | grep openstack

openstack-ceilometer-compute.service enabled

openstack-ceilometer-polling.service disabled

openstack-nova-compute.service enabled

[root@compute ~]# systemctl list-unit-files | grep neutron

neutron-dhcp-agent.service disabled

neutron-l3-agent.service disabled

neutron-linuxbridge-cleanup.service disabled

neutron-metadata-agent.service disabled

neutron-netns-cleanup.service disabled

neutron-openvswitch-agent.service enabled

neutron-ovs-cleanup.service enabled

neutron-server.service disabled

[root@compute ~]# systemctl list-unit-files | grep ovs

neutron-ovs-cleanup.service enabled

Network interfaces on Controller node right after OpenStack Mitaka installation:

[root@controller ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens3: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:2b:d5:fb brd ff:ff:ff:ff:ff:ff

inet 192.168.2.10/24 brd 192.168.2.255 scope global ens3

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe2b:d5fb/64 scope link

valid_lft forever preferred_lft forever

3: ens4: mtu 1500 qdisc noop state DOWN qlen 1000

link/ether 52:54:00:46:a1:95 brd ff:ff:ff:ff:ff:ff

Network interfaces on Network node right after OpenStack Mitaka installation:

[root@network ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens3: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:8d:87:98 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.11/24 brd 192.168.2.255 scope global ens3

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe8d:8798/64 scope link

valid_lft forever preferred_lft forever

3: ens4: mtu 1500 qdisc pfifo_fast master ovs-system state UP qlen 1000

link/ether 52:54:00:b6:db:29 brd ff:ff:ff:ff:ff:ff

inet6 fe80::5054:ff:feb6:db29/64 scope link

valid_lft forever preferred_lft forever

4: ovs-system: mtu 1500 qdisc noop state DOWN

link/ether ae:29:85:96:49:4f brd ff:ff:ff:ff:ff:ff

5: br-ens4: mtu 1500 qdisc noop state DOWN

link/ether ea:55:f1:11:3f:46 brd ff:ff:ff:ff:ff:ff

6: br-ex: mtu 1500 qdisc noop state DOWN

link/ether 02:be:14:98:b0:40 brd ff:ff:ff:ff:ff:ff

7: br-int: mtu 1500 qdisc noop state DOWN

link/ether 06:c2:c8:08:cc:4c brd ff:ff:ff:ff:ff:ff

Network interfaces on Compute node right after OpenStack Mitaka installation:

[root@compute ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens3: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:09:04:e7 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.12/24 brd 192.168.2.255 scope global ens3

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe09:4e7/64 scope link

valid_lft forever preferred_lft forever

3: ens4: mtu 1500 qdisc pfifo_fast master ovs-system state UP qlen 1000

link/ether 52:54:00:ad:a1:fa brd ff:ff:ff:ff:ff:ff

inet6 fe80::5054:ff:fead:a1fa/64 scope link

valid_lft forever preferred_lft forever

4: ovs-system: mtu 1500 qdisc noop state DOWN

link/ether 8a:8a:de:19:d8:3b brd ff:ff:ff:ff:ff:ff

5: br-int: mtu 1500 qdisc noop state DOWN

link/ether 4a:54:44:a4:f2:40 brd ff:ff:ff:ff:ff:ff

6: br-ens4: mtu 1500 qdisc noop state DOWN

link/ether 8a:27:66:b8:2c:40 brd ff:ff:ff:ff:ff:ff

OVS configuration on Controller node right after OpenStack Mitaka installation:

[root@controller ~]# ovs-vsctl show

-bash: ovs-vsctl: command not found

Note: no OVS-related services were installed on the Controller node, which is why the command is not found.

OVS configuration on Network node right after OpenStack Mitaka installation:

[root@network ~]# ovs-vsctl show

581bbce5-b7cc-4a7f-ad29-a655de6c0963

Bridge br-int

fail_mode: secure

Port "int-br-ens4"

Interface "int-br-ens4"

type: patch

options: {peer="phy-br-ens4"}

Port br-int

Interface br-int

type: internal

Bridge "br-ens4"

Port "ens4"

Interface "ens4"

Port "br-ens4"

Interface "br-ens4"

type: internal

Port "phy-br-ens4"

Interface "phy-br-ens4"

type: patch

options: {peer="int-br-ens4"}

Bridge br-ex

Port br-ex

Interface br-ex

type: internal

ovs_version: "2.5.0"

OVS configuration on Compute node right after OpenStack Mitaka installation:

[root@compute ~]# ovs-vsctl show

cf0f2a0c-557f-4a2a-bbae-8e61c3f1ee6b

Bridge br-int

fail_mode: secure

Port br-int

Interface br-int

type: internal

Port "int-br-ens4"

Interface "int-br-ens4"

type: patch

options: {peer="phy-br-ens4"}

Bridge "br-ens4"

Port "ens4"

Interface "ens4"

Port "br-ens4"

Interface "br-ens4"

type: internal

Port "phy-br-ens4"

Interface "phy-br-ens4"

type: patch

options: {peer="int-br-ens4"}

ovs_version: "2.5.0"

Verify GlusterFS Storage mount point (Controller node only):

[root@controller ~]# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/vda1 xfs 48G 3.1G 45G 7% /

devtmpfs devtmpfs 1.9G 0 1.9G 0% /dev

tmpfs tmpfs 1.9G 52K 1.9G 1% /dev/shm

tmpfs tmpfs 1.9G 8.4M 1.9G 1% /run

tmpfs tmpfs 1.9G 0 1.9G 0% /sys/fs/cgroup

/dev/loop0 ext4 1.9G 6.1M 1.7G 1% /srv/node/swiftloopback

tmpfs tmpfs 380M 0 380M 0% /run/user/0

192.168.2.35:/gluster_volume_0 fuse.glusterfs 10G 33M 10G 1% /var/lib/cinder/mnt/50e4195bf74921263ed45cc595ce60d7

Note: although our GlusterFS storage consists of two redundant GlusterFS peers, only one peer is mounted on the Controller node at a time.
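The mount check can also be scripted. The sketch below is an illustration only: `gluster_mounts` is a hypothetical helper that scans `df -hT` output for rows of type fuse.glusterfs, and it assumes that mount points contain no spaces. The sample text is a shortened copy of the listing above.

```python
def gluster_mounts(df_output):
    """Return (source, mount_point) pairs for fuse.glusterfs rows of `df -hT`."""
    mounts = []
    for line in df_output.splitlines()[1:]:  # skip the header row
        fields = line.split()
        # Columns: Filesystem, Type, Size, Used, Avail, Use%, Mounted on
        if len(fields) >= 7 and fields[1] == "fuse.glusterfs":
            mounts.append((fields[0], fields[6]))
    return mounts


# Shortened copy of the df -hT output shown above.
SAMPLE = """Filesystem Type Size Used Avail Use% Mounted on
/dev/vda1 xfs 48G 3.1G 45G 7% /
192.168.2.35:/gluster_volume_0 fuse.glusterfs 10G 33M 10G 1% /var/lib/cinder/mnt/50e4195bf74921263ed45cc595ce60d7
"""

if __name__ == "__main__":
    for source, mount_point in gluster_mounts(SAMPLE):
        print(source, "->", mount_point)
```

An empty list would indicate that the Cinder volume service has not mounted the Gluster volume.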

7. Configure network interfaces and Open vSwitch (OVS)

Note: we will not modify the Controller node interfaces, since the Controller is not running any network-related OpenStack services; all networking tasks are now served by the Network node.

Backup / create network interface files (Network node only):

[root@network ~]# cp /etc/sysconfig/network-scripts/ifcfg-ens3 /root/ifcfg-ens3.backup

[root@network ~]# cp /etc/sysconfig/network-scripts/ifcfg-ens3 /etc/sysconfig/network-scripts/ifcfg-br-ex

Modify ifcfg-ens3 file (Network node only) to look like below:

DEVICE="ens3"

ONBOOT="yes"

Modify ifcfg-br-ex file (Network node only) to look like below:

TYPE="Ethernet"

BOOTPROTO="static"

DEFROUTE="yes"

PEERDNS="no"

NAME="br-ex"

UUID="650ea528-3b38-4973-9648-d577bfb53ecb"

DEVICE="br-ex"

ONBOOT="yes"

IPADDR="192.168.2.11"

PREFIX="24"

GATEWAY="192.168.2.1"

Connect ens3 interface as a port to br-ex bridge (Network node only):

Note: the command below triggers a network restart, so you will lose the network connection for a while! The connection should come back up if you modified the ifcfg-ens3 and ifcfg-br-ex files correctly.

[root@network ~]# ovs-vsctl add-port br-ex ens3; systemctl restart network

Verify new network configuration (Network node only) after modifications (public IP should be now assigned to br-ex interface):

[root@network ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens3: mtu 1500 qdisc pfifo_fast master ovs-system state UP qlen 1000

link/ether 52:54:00:8d:87:98 brd ff:ff:ff:ff:ff:ff

inet6 fe80::5054:ff:fe8d:8798/64 scope link

valid_lft forever preferred_lft forever

3: ens4: mtu 1500 qdisc pfifo_fast master ovs-system state UP qlen 1000

link/ether 52:54:00:b6:db:29 brd ff:ff:ff:ff:ff:ff

inet6 fe80::5054:ff:feb6:db29/64 scope link

valid_lft forever preferred_lft forever

4: ovs-system: mtu 1500 qdisc noop state DOWN

link/ether 7a:72:a4:c0:48:25 brd ff:ff:ff:ff:ff:ff

5: br-ens4: mtu 1500 qdisc noop state DOWN

link/ether ea:55:f1:11:3f:46 brd ff:ff:ff:ff:ff:ff

6: br-ex: mtu 1500 qdisc noqueue state UNKNOWN

link/ether 02:be:14:98:b0:40 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.11/24 brd 192.168.2.255 scope global br-ex

valid_lft forever preferred_lft forever

inet6 fe80::be:14ff:fe98:b040/64 scope link

valid_lft forever preferred_lft forever

7: br-int: mtu 1500 qdisc noop state DOWN

link/ether 06:c2:c8:08:cc:4c brd ff:ff:ff:ff:ff:ff

Verify OVS configuration (Network node only). Now port ens3 should be assigned to br-ex:

[root@network ~]# ovs-vsctl show

581bbce5-b7cc-4a7f-ad29-a655de6c0963

Bridge br-int

fail_mode: secure

Port "int-br-ens4"

Interface "int-br-ens4"

type: patch

options: {peer="phy-br-ens4"}

Port br-int

Interface br-int

type: internal

Bridge "br-ens4"

Port "br-ens4"

Interface "br-ens4"

type: internal

Port "ens4"

Interface "ens4"

Port "phy-br-ens4"

Interface "phy-br-ens4"

type: patch

options: {peer="int-br-ens4"}

Bridge br-ex

Port br-ex

Interface br-ex

type: internal

Port "ens3"

Interface "ens3"

ovs_version: "2.5.0"

Note: we already configured the internal network interfaces (br-ens4, ens4) in the answer file (/root/answers.txt) by means of the parameters below:

CONFIG_NEUTRON_OVS_BRIDGE_MAPPINGS=physnet1:br-ens4

CONFIG_NEUTRON_OVS_BRIDGE_IFACES=br-ens4:ens4

CONFIG_NEUTRON_OVS_BRIDGES_COMPUTE=br-ens4

Therefore no manual configuration of the internal network interfaces (br-ens4, ens4) is required on the OpenStack nodes.
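The two mapping parameters chain together: a physical network name maps to an OVS bridge, and the bridge maps to a NIC. A minimal sketch of that relationship follows, assuming the comma-separated `left:right` syntax used in the answer file; the function names `parse_pairs` and `physnet_to_interface` are hypothetical and not part of Packstack.

```python
def parse_pairs(value):
    """Parse 'a:b,c:d' into {'a': 'b', 'c': 'd'}."""
    pairs = {}
    for item in value.split(","):
        item = item.strip()
        if item:
            left, right = item.split(":", 1)
            pairs[left] = right
    return pairs


def physnet_to_interface(bridge_mappings, bridge_ifaces):
    """Map each physical network name to the NIC behind its OVS bridge.

    A value of None means the bridge has no interface assigned.
    """
    bridges = parse_pairs(bridge_mappings)  # physnet -> bridge
    ifaces = parse_pairs(bridge_ifaces)     # bridge -> interface
    return {physnet: ifaces.get(bridge) for physnet, bridge in bridges.items()}


if __name__ == "__main__":
    # Values of CONFIG_NEUTRON_OVS_BRIDGE_MAPPINGS and CONFIG_NEUTRON_OVS_BRIDGE_IFACES.
    print(physnet_to_interface("physnet1:br-ens4", "br-ens4:ens4"))
```

With the values from the answer file, physnet1 resolves to ens4, which matches the ens4 port shown under br-ens4 in the ovs-vsctl output.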

8. Verify OpenStack services

After a Packstack-based OpenStack installation, the file /root/keystonerc_admin (which contains admin credentials and other authentication parameters) is created on the Controller node. We need to source this file to import the OpenStack admin credentials into shell environment variables:

[root@controller ~]# source /root/keystonerc_admin

[root@controller ~(keystone_admin)]#

Note: after sourcing the file, our prompt should include the keystone_admin phrase.
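Sourcing the file works because it consists of `export VAR=value` lines. If a script needs the same credentials without a shell, the lines can be parsed directly. The following is a rough sketch: `parse_rc` is a hypothetical helper, and the sample content is an assumption made for illustration, since the real file contents are not shown in this tutorial.

```python
import shlex


def parse_rc(text):
    """Collect VAR=value pairs from `export VAR=value` lines."""
    env = {}
    for line in text.splitlines():
        # shlex handles shell quoting; comments=True drops '#' comments.
        tokens = shlex.split(line, comments=True)
        if len(tokens) >= 2 and tokens[0] == "export" and "=" in tokens[1]:
            name, value = tokens[1].split("=", 1)
            env[name] = value
    return env


# Assumed, illustrative content; the real /root/keystonerc_admin may differ.
SAMPLE = """unset OS_SERVICE_TOKEN
export OS_USERNAME=admin
export OS_PASSWORD=password
export OS_AUTH_URL=http://192.168.2.10:5000/v2.0
"""

if __name__ == "__main__":
    for name, value in sorted(parse_rc(SAMPLE).items()):
        print(name, "=", value)
```

The resulting dictionary could be merged into `os.environ` before calling OpenStack command line tools from a script.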

Check OpenStack status:

[root@controller ~(keystone_admin)]# openstack-status

== Nova services ==

openstack-nova-api: active

openstack-nova-compute: inactive (disabled on boot)

openstack-nova-network: inactive (disabled on boot)

openstack-nova-scheduler: active

openstack-nova-cert: active

openstack-nova-conductor: active

openstack-nova-console: inactive (disabled on boot)

openstack-nova-consoleauth: active

openstack-nova-xvpvncproxy: inactive (disabled on boot)

== Glance services ==

openstack-glance-api: active

openstack-glance-registry: active

== Keystone service ==

openstack-keystone: inactive (disabled on boot)

== Horizon service ==

openstack-dashboard: active

== neutron services ==

neutron-server: active

neutron-dhcp-agent: inactive (disabled on boot)

neutron-l3-agent: inactive (disabled on boot)

neutron-metadata-agent: inactive (disabled on boot)

== Swift services ==

openstack-swift-proxy: active

openstack-swift-account: active

openstack-swift-container: active

openstack-swift-object: active

== Cinder services ==

openstack-cinder-api: active

openstack-cinder-scheduler: active

openstack-cinder-volume: active

openstack-cinder-backup: active

== Ceilometer services ==

openstack-ceilometer-api: inactive (disabled on boot)

openstack-ceilometer-central: active

openstack-ceilometer-compute: inactive (disabled on boot)

openstack-ceilometer-collector: active

openstack-ceilometer-notification: active

== Support services ==

mysqld: inactive (disabled on boot)

dbus: active

target: inactive (disabled on boot)

rabbitmq-server: active

memcached: active

== Keystone users ==

/usr/lib/python2.7/site-packages/keystoneclient/shell.py:64: DeprecationWarning: The keystone CLI is deprecated in favor of python-openstackclient. For a Python library, continue using python-keystoneclient.

'python-keystoneclient.', DeprecationWarning)

/usr/lib/python2.7/site-packages/keystoneclient/v2_0/client.py:145: DeprecationWarning: Constructing an instance of the keystoneclient.v2_0.client.Client class without a session is deprecated as of the 1.7.0 release and may be removed in the 2.0.0 release.

'the 2.0.0 release.', DeprecationWarning)

/usr/lib/python2.7/site-packages/keystoneclient/v2_0/client.py:147: DeprecationWarning: Using the 'tenant_name' argument is deprecated in version '1.7.0' and will be removed in version '2.0.0', please use the 'project_name' argument instead

super(Client, self).__init__(**kwargs)

/usr/lib/python2.7/site-packages/debtcollector/renames.py:45: DeprecationWarning: Using the 'tenant_id' argument is deprecated in version '1.7.0' and will be removed in version '2.0.0', please use the 'project_id' argument instead

return f(*args, **kwargs)

/usr/lib/python2.7/site-packages/keystoneclient/httpclient.py:371: DeprecationWarning: Constructing an HTTPClient instance without using a session is deprecated as of the 1.7.0 release and may be removed in the 2.0.0 release.

'the 2.0.0 release.', DeprecationWarning)

/usr/lib/python2.7/site-packages/keystoneclient/session.py:140: DeprecationWarning: keystoneclient.session.Session is deprecated as of the 2.1.0 release in favor of keystoneauth1.session.Session. It will be removed in future releases.

DeprecationWarning)

/usr/lib/python2.7/site-packages/keystoneclient/auth/identity/base.py:56: DeprecationWarning: keystoneclient auth plugins are deprecated as of the 2.1.0 release in favor of keystoneauth1 plugins. They will be removed in future releases.

'in future releases.', DeprecationWarning)

+----------------------------------+------------+---------+----------------------+

| id | name | enabled | email |

+----------------------------------+------------+---------+----------------------+

| addd749374bb40d4bbebbc283ec27c53 | admin | True | root@localhost |

| 5fca74ac284e4bfbb0b8a8909f2d3140 | aodh | True | aodh@localhost |

| 7edf532787fc4f60a04d815f7b9ddaf8 | ceilometer | True | ceilometer@localhost |

| 4a728cb403ec49d8b63f8b5b9c3e4bcf | cinder | True | cinder@localhost |

| b51e258489fc4179bbdbb6b414f7a64c | glance | True | glance@localhost |

| 5f0bc436abc94f6d859ea35fe616b326 | gnocchi | True | gnocchi@localhost |

| 177eef6cd60640fa8fcfa2087993f3a2 | neutron | True | neutron@localhost |

| 7686a4c862504a55bef975365ae7047d | nova | True | nova@localhost |

| af9881187f604e05a527b5cea0c75c18 | swift | True | swift@localhost |

+----------------------------------+------------+---------+----------------------+

== Glance images ==

+----+------+

| ID | Name |

+----+------+

+----+------+

== Nova managed services ==

+----+------------------+------------+----------+---------+-------+----------------------------+-----------------+

| Id | Binary | Host | Zone | Status | State | Updated_at | Disabled Reason |

+----+------------------+------------+----------+---------+-------+----------------------------+-----------------+

| 4 | nova-cert | controller | internal | enabled | up | 2016-08-13T18:39:01.000000 | - |

| 5 | nova-consoleauth | controller | internal | enabled | up | 2016-08-13T18:39:08.000000 | - |

| 6 | nova-scheduler | controller | internal | enabled | up | 2016-08-13T18:39:09.000000 | - |

| 7 | nova-conductor | controller | internal | enabled | up | 2016-08-13T18:39:09.000000 | - |

| 11 | nova-compute | compute | nova | enabled | up | 2016-08-13T18:39:06.000000 | - |

+----+------------------+------------+----------+---------+-------+----------------------------+-----------------+

== Nova networks ==

+----+-------+------+

| ID | Label | Cidr |

+----+-------+------+

+----+-------+------+

== Nova instance flavors ==

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

| ID | Name | Memory_MB | Disk | Ephemeral | Swap | VCPUs | RXTX_Factor | Is_Public |

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

| 1 | m1.tiny | 512 | 1 | 0 | | 1 | 1.0 | True |

| 2 | m1.small | 2048 | 20 | 0 | | 1 | 1.0 | True |

| 3 | m1.medium | 4096 | 40 | 0 | | 2 | 1.0 | True |

| 4 | m1.large | 8192 | 80 | 0 | | 4 | 1.0 | True |

| 5 | m1.xlarge | 16384 | 160 | 0 | | 8 | 1.0 | True |

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

== Nova instances ==

+----+------+-----------+--------+------------+-------------+----------+

| ID | Name | Tenant ID | Status | Task State | Power State | Networks |

+----+------+-----------+--------+------------+-------------+----------+

+----+------+-----------+--------+------------+-------------+----------+

Note: the status output above includes deprecation warnings (for CLI commands and arguments that are scheduled for removal). They do not affect OpenStack functionality and can be ignored.
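If the warnings make the tables hard to read, they can be filtered out of the output. The following is only a minimal sketch, assuming each warning has the two-line form Python normally prints (a `path.py:NNN: DeprecationWarning: ...` line followed by an indented source line). The name `strip_deprecation_warnings` is a hypothetical helper, not part of any OpenStack client.

```shell
# Hypothetical helper: drop Python DeprecationWarning lines from stdin.
# A warning line looks like "<file>.py:<line>: DeprecationWarning: <text>";
# the indented source line that directly follows it is dropped as well.
strip_deprecation_warnings() {
    awk '
        after_warning && /^[ \t]/ { after_warning = 0; next }
        { after_warning = 0 }
        /\.py:[0-9]+: DeprecationWarning: / { after_warning = 1; next }
        { print }
    '
}

# Python writes warnings to stderr, so merge it into stdout first, e.g.:
# <your status command> 2>&1 | strip_deprecation_warnings
```

Filtering instead of discarding stderr entirely keeps genuine error messages visible.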

Verify Neutron agent list:

[root@controller ~(keystone_admin)]# neutron agent-list

+--------------------------------------+--------------------+---------+-------------------+-------+----------------+---------------------------+

| id | agent_type | host | availability_zone | alive | admin_state_up | binary |

+--------------------------------------+--------------------+---------+-------------------+-------+----------------+---------------------------+

| 4ab7a9fd-949f-435f-91e9-c0bce7b0a67a | L3 agent | network | nova | :-) | True | neutron-l3-agent |

| 80fd1818-383d-4cff-8c6b-1434462d53e0 | Metadata agent | network | | :-) | True | neutron-metadata-agent |

| 98b4feb0-1b4b-473d-8bc5-b76db9f2b4e5 | DHCP agent | network | nova | :-) | True | neutron-dhcp-agent |

| ac9325c5-0d1f-4b7c-b6fa-c8aa4d647465 | Metering agent | network | | :-) | True | neutron-metering-agent |

| c19fa020-a256-4ad3-806c-6aa0ecb16356 | Open vSwitch agent | compute | | :-) | True | neutron-openvswitch-agent |

| dd1d1324-7abc-435d-8a3c-15cf9b1279e5 | Open vSwitch agent | network | | :-) | True | neutron-openvswitch-agent |

+--------------------------------------+--------------------+---------+-------------------+-------+----------------+---------------------------+

Verify host list:

[root@controller ~(keystone_admin)]# nova host-list

+------------+-------------+----------+

| host_name | service | zone |

+------------+-------------+----------+

| controller | cert | internal |

| controller | consoleauth | internal |

| controller | scheduler | internal |

| controller | conductor | internal |

| compute | compute | nova |

+------------+-------------+----------+

Verify service list:

[root@controller ~(keystone_admin)]# nova service-list

+----+------------------+------------+----------+---------+-------+----------------------------+-----------------+

| Id | Binary | Host | Zone | Status | State | Updated_at | Disabled Reason |

+----+------------------+------------+----------+---------+-------+----------------------------+-----------------+

| 4 | nova-cert | controller | internal | enabled | up | 2016-08-13T20:51:45.000000 | - |

| 5 | nova-consoleauth | controller | internal | enabled | up | 2016-08-13T20:51:45.000000 | - |

| 6 | nova-scheduler | controller | internal | enabled | up | 2016-08-13T20:51:45.000000 | - |

| 7 | nova-conductor | controller | internal | enabled | up | 2016-08-13T20:51:45.000000 | - |

| 11 | nova-compute | compute | nova | enabled | up | 2016-08-13T20:51:45.000000 | - |

+----+------------------+------------+----------+---------+-------+----------------------------+-----------------+

9. Test GlusterFS integration with OpenStack

The Controller node mounts the redundant GlusterFS storage on its filesystem using the glusterfs-fuse driver. The GlusterFS volume mount point can be verified with the command below:

[root@controller ~(keystone_admin)]# df -hT | grep glusterfs

192.168.2.35:/gluster_volume_0 fuse.glusterfs 10G 34M 10G 1% /var/lib/cinder/mnt/50e4195bf74921263ed45cc595ce60d7

Now let’s create a volume (test_volume, 2 GiB in size) to test our Cinder backend (GlusterFS storage):

[root@controller ~(keystone_admin)]# cinder create 2 --display-name test_volume

+--------------------------------+--------------------------------------+

| Property | Value |

+--------------------------------+--------------------------------------+

| attachments | [] |

| availability_zone | nova |

| bootable | false |

| consistencygroup_id | None |

| created_at | 2016-08-13T21:21:45.000000 |

| description | None |

| encrypted | False |

| id | f7eede8f-4a1d-4a0e-8ae8-a84f8a773eed |

| metadata | {} |

| migration_status | None |

| multiattach | False |

| name | test_volume |

| os-vol-host-attr:host | None |

| os-vol-mig-status-attr:migstat | None |

| os-vol-mig-status-attr:name_id | None |

| os-vol-tenant-attr:tenant_id | aee428c95e55438181d9bf632478f971 |

| replication_status | disabled |

| size | 2 |

| snapshot_id | None |

| source_volid | None |

| status | creating |

| updated_at | None |

| user_id | addd749374bb40d4bbebbc283ec27c53 |

| volume_type | None |

+--------------------------------+--------------------------------------+

Check whether the volume was created successfully:

[root@controller ~(keystone_admin)]# cinder list

+--------------------------------------+-----------+-------------+------+-------------+----------+-------------+

| ID | Status | Name | Size | Volume Type | Bootable | Attached to |

+--------------------------------------+-----------+-------------+------+-------------+----------+-------------+

| f7eede8f-4a1d-4a0e-8ae8-a84f8a773eed | available | test_volume | 2 | - | false | |

+--------------------------------------+-----------+-------------+------+-------------+----------+-------------+

Now log in to both Gluster peers to make sure that the volume was created on the Gluster peer bricks:

[root@glusterfs1 ~]# gluster volume info

Volume Name: gluster_volume_0

Type: Replicate

Volume ID: b88771d3-bf16-4526-b00c-b8a2bd5d1a3f

Status: Started

Number of Bricks: 1 x 2 = 2

Transport-type: tcp

Bricks:

Brick1: 192.168.2.35:/glusterfs/brick1/gluster_volume_0

Brick2: 192.168.2.36:/glusterfs/brick1/gluster_volume_0

Options Reconfigured:

performance.readdir-ahead: on

[root@glusterfs1 ~]# ls -l /glusterfs/brick1/gluster_volume_0/

total 712

-rw-rw-rw-. 2 root root 2148073472 Aug 13 23:22 volume-f7eede8f-4a1d-4a0e-8ae8-a84f8a773eed

[root@glusterfs2 ~]# ls -l /glusterfs/brick1/gluster_volume_0/

total 712

-rw-rw-rw-. 2 root root 2148073472 Aug 13 23:22 volume-f7eede8f-4a1d-4a0e-8ae8-a84f8a773eed

The output of the commands above shows that the volume ID in Cinder (f7eede8f-4a1d-4a0e-8ae8-a84f8a773eed) matches the name of the volume file on both Gluster peers (volume-f7eede8f-4a1d-4a0e-8ae8-a84f8a773eed).
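The same check can be scripted instead of reading the `ls` output by eye. The snippet below is a minimal sketch under stated assumptions: `volume_on_brick` is a hypothetical helper name, and it only tests that a regular file named `volume-<ID>` exists in the given brick directory. It says nothing about replication health.

```shell
# Hypothetical helper (not part of Cinder or GlusterFS):
# succeeds when <brick_dir>/volume-<volume_id> exists as a regular file.
volume_on_brick() {
    vob_id="$1"
    vob_dir="$2"
    [ -f "${vob_dir}/volume-${vob_id}" ]
}

# Example, to be run on each Gluster peer with the ID reported by `cinder list`:
# volume_on_brick f7eede8f-4a1d-4a0e-8ae8-a84f8a773eed /glusterfs/brick1/gluster_volume_0 \
#     && echo "volume file present"
```

Running it on both glusterfs1 and glusterfs2 should succeed if the replicated volume holds the file on each brick.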

Now it’s time to create a Project Tenant and launch OpenStack instances in our newly created OpenStack Mitaka cloud.

If I want to deploy with more than one compute node, what changes are required?

I think I need to add the IP of the additional compute node to “CONFIG_COMPUTE_HOSTS=192.168.2.12”.

Yes, exactly.
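As an illustration only: Packstack takes a comma-separated list of IP addresses in CONFIG_COMPUTE_HOSTS. The sketch below edits that key in an answer file; `set_answer` is a hypothetical helper, the file name `answers.txt` is a placeholder, and 192.168.2.13 is an assumed address for a second compute node that does not appear in this tutorial.

```shell
# Hypothetical helper: set KEY=VALUE in a Packstack answer file.
# Replaces the existing uncommented "KEY=" line, or appends one if none exists.
set_answer() {
    sa_file="$1"
    sa_key="$2"
    sa_value="$3"
    sa_tmp="$(mktemp)" || return 1
    if awk -v k="$sa_key" -v v="$sa_value" '
            index($0, k "=") == 1 { print k "=" v; found = 1; next }
            { print }
            END { if (!found) print k "=" v }
        ' "$sa_file" > "$sa_tmp"
    then
        # Write back through the original file to keep its permissions.
        cat "$sa_tmp" > "$sa_file"
        sa_status=$?
    else
        sa_status=1
    fi
    rm -f "$sa_tmp"
    return "$sa_status"
}

# Example (192.168.2.13 is an assumed second compute node):
# set_answer answers.txt CONFIG_COMPUTE_HOSTS 192.168.2.12,192.168.2.13
```

After changing the answer file, Packstack has to be run again with it for the new node to be deployed.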

I am trying it. Last time I tried, br-eno4 was not created on my compute node.

Hi Shailendra

Be aware that on Mitaka you need to configure more parameters in the answer file:

CONFIG_NEUTRON_OVS_BRIDGE_MAPPINGS=physnet1:br-eth1

CONFIG_NEUTRON_OVS_BRIDGE_IFACES=br-eth1:eth1

CONFIG_NEUTRON_OVS_BRIDGES_COMPUTE=br-eth1

Check this issue:

https://bugzilla.redhat.com/show_bug.cgi?id=1338012

…maybe this will help you.

The link is not working: How to Install and Configure GlusterFS Storage on CentOS 7 Servers

I fixed it, now it’s working.

Thanks for the remark!

Hi,

I tried with the following:

[root@openstck-controller ~(keystone_admin)]# grep CONFIG_NEUTRON_OVS_BRIDGE answer-file-2016-090-28.txt | grep -v ^#

CONFIG_NEUTRON_OVS_BRIDGE_MAPPINGS=physnet1:br-eno2

CONFIG_NEUTRON_OVS_BRIDGE_IFACES=br-eno2:eno2

CONFIG_NEUTRON_OVS_BRIDGES_COMPUTE=br-eno2

[root@openstck-controller ~(keystone_admin)]#

Now the VMs I create have a problem: they are not getting an IP address.

Hi

The part of the configuration you presented looks OK.

What is the output of the below command?

[root@network ~]# systemctl status neutron-dhcp-agent.service

This service is running:

● neutron-dhcp-agent.service - OpenStack Neutron DHCP Agent

Loaded: loaded (/usr/lib/systemd/system/neutron-dhcp-agent.service; enabled; vendor preset: disabled)

Active: active (running) since Tue 2016-10-04 03:28:05 EDT; 1 weeks 5 days ago

Main PID: 24758 (neutron-dhcp-ag)

CGroup: /system.slice/neutron-dhcp-agent.service

├─24758 /usr/bin/python2 /usr/bin/neutron-dhcp-agent --config-file /usr/share/neutron/neutron-dist.conf --config-file /etc/neutron/neutron.conf --config-fil…

├─24805 sudo neutron-rootwrap-daemon /etc/neutron/rootwrap.conf

├─24806 /usr/bin/python2 /usr/bin/neutron-rootwrap-daemon /etc/neutron/rootwrap.conf

└─56655 dnsmasq --no-hosts --no-resolv --strict-order --except-interface=lo --pid-file=/var/lib/neutron/dhcp/e71986db-723f-45ea-be74-795e912a52d4/pid --dhcp…

Oct 04 07:43:15 network-node dnsmasq[24862]: read /var/lib/neutron/dhcp/e71986db-723f-45ea-be74-795e912a52d4/addn_hosts - 5 addresses

Oct 04 07:43:15 network-node dnsmasq-dhcp[24862]: read /var/lib/neutron/dhcp/e71986db-723f-45ea-be74-795e912a52d4/host

Oct 04 07:43:15 network-node dnsmasq-dhcp[24862]: read /var/lib/neutron/dhcp/e71986db-723f-45ea-be74-795e912a52d4/opts

Oct 13 05:03:19 network-node dnsmasq[56655]: started, version 2.66 cachesize 150

Oct 13 05:03:19 network-node dnsmasq[56655]: compile time options: IPv6 GNU-getopt DBus no-i18n IDN DHCP DHCPv6 no-Lua TFTP no-conntrack ipset auth

Oct 13 05:03:19 network-node dnsmasq[56655]: warning: no upstream servers configured

Oct 13 05:03:19 network-node dnsmasq-dhcp[56655]: DHCP, static leases only on 192.168.20.0, lease time 1d

Oct 13 05:03:19 network-node dnsmasq[56655]: read /var/lib/neutron/dhcp/e71986db-723f-45ea-be74-795e912a52d4/addn_hosts - 5 addresses

Oct 13 05:03:19 network-node dnsmasq-dhcp[56655]: read /var/lib/neutron/dhcp/e71986db-723f-45ea-be74-795e912a52d4/host

Oct 13 05:03:19 network-node dnsmasq-dhcp[56655]: read /var/lib/neutron/dhcp/e71986db-723f-45ea-be74-795e912a52d4/opts

[root@network-node ~]#

Hi Shailendra

Another thing that comes to my mind is that the eth1 interface (internal communication network) may be DOWN on the Compute node, and/or eth1 may not be attached to the br-eth1 OVS bridge. Please verify both.
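One possible way to run that check is sketched below. It relies on `ip -o link show` printing the link state and on `ovs-vsctl list-ports` printing one port name per line. The function names `link_is_up` and `port_listed` are hypothetical helpers, and eth1 / br-eth1 are the names used in this thread, so substitute your own (for example eno2 / br-eno2).

```shell
# Hypothetical helpers; both read command output from standard input.

# Succeeds when the text on stdin (from `ip -o link show <iface>`)
# reports "state UP".
link_is_up() {
    grep -q 'state UP'
}

# Succeeds when the name given in $1 is exactly one of the lines on stdin
# (from `ovs-vsctl list-ports <bridge>`).
port_listed() {
    grep -qxF -- "$1"
}

# Example on the Compute node:
# ip -o link show eth1 | link_is_up || echo "eth1 is not UP"
# ovs-vsctl list-ports br-eth1 | port_listed eth1 || echo "eth1 is not attached to br-eth1"
```

If either message is printed, bringing the interface up or re-adding it to the bridge would be the next thing to look at.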

Hi Grzegorz,

What version of Gluster did you use for this deployment?

Hi Teik

I used version: 3.7.6

[root@glusterfs1 ~]# glusterfs -V

glusterfs 3.7.6 built on Nov 9 2015 15:20:23

Repository revision: git://git.gluster.com/glusterfs.git

Copyright (c) 2006-2013 Red Hat, Inc. <http://www.redhat.com/>

GlusterFS comes with ABSOLUTELY NO WARRANTY.

It is licensed to you under your choice of the GNU Lesser

General Public License, version 3 or any later version (LGPLv3

or later), or the GNU General Public License, version 2 (GPLv2),

in all cases as published by the Free Software Foundation.

I have already installed the RDO repository for Liberty. Is it possible to remove it and install the Mitaka repository instead, and if so, how do I do it?

Hello

I installed OpenStack Mitaka.

But when I click on any of its elements (images, networks, instances, volumes, routers, etc.),

this is the error I get:

Error: Could not retrieve usage information.

Help me …